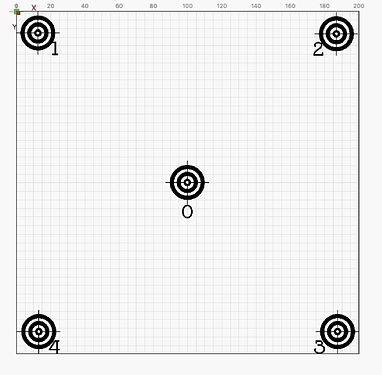

The reason for “decreasing” the area to, e.g., 200x200 mm is to keep the correction smaller.

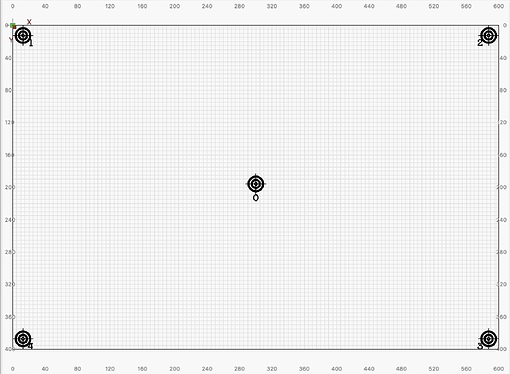

When I calibrate my camera to the entire work surface, which is my standard situation, I have to compromise on error correction because the area is relatively large. I start with T0 and hope it has no deviation, or at least only a very small one (+/- a few tenths of a millimeter). Then I measure at positions 1 to 4 and use the average value for the correction. (This requires that Camera Alignment and Lens Calibration have been done as well as possible before the fine-tuning starts.)
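To make the averaging step concrete, here is a minimal sketch of how the four measurements could be combined. It assumes each measurement is an X/Y deviation in millimeters; the function name `average_offset` and the example numbers are made up for illustration and are not real measurements or part of any software.

```python
from statistics import fmean


def average_offset(deviations):
    """Return the mean (dx, dy) of a list of measured (dx, dy) deviations in mm."""
    if not deviations:
        raise ValueError("need at least one measurement")
    dx = fmean([d[0] for d in deviations])
    dy = fmean([d[1] for d in deviations])
    return dx, dy


# Hypothetical deviations (in mm) measured at positions 1 to 4.
measured = [(0.3, -0.1), (0.1, -0.3), (0.2, -0.2), (0.2, -0.2)]

dx, dy = average_offset(measured)
print(f"average deviation: X {dx:+.2f} mm, Y {dy:+.2f} mm")
```

Whether the average is entered as-is or with the opposite sign depends on how the shift fields in your software are defined, so check the direction with a test mark before trusting it.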

When I want extreme accuracy for certain tasks, I only check and adjust the “small” area I want to use; the variation there is more manageable and faster to compensate for.

I have no idea if that’s the right way to do it, but it works very well for me, with tolerances down to almost nothing.