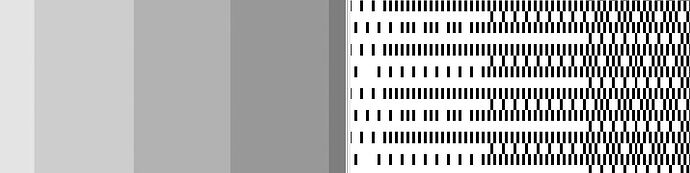

I interpret this to be the opposite. First, let me clarify that the overall macroscopic appearance, the shaded “macropixels” (probably 0.25" square), is not what matters here. What matters is the unusually deep color within each macropixel. If I did what I call a “standard” fill, with a line interval around the beam width ±50%, at various powers, I could not get anywhere near the deep color change shown here. The fine honeycomb structure is what did that. As an extra design element, present but not relevant, the user rotated the 0.25" “macropixel” rectangles by 45 degrees. My apologies if that creates confusion about where the raster motion is happening.

I’ll try to create a purer example soon: just regular old shaded squares with no rotation, rather than the Mario-style “8-bit pixel art” made of macropixels. I can see this was adding confusion about what’s being discussed.

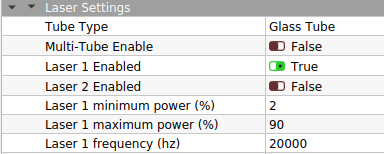

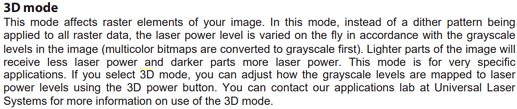

ULS never tries to build a halftoning “dash” (or dot) out of two vertical lines whose length is calculated from the shade of an area twice as tall. I’m certain of that; in practice it often caused trouble, since the line interval was also limited to fixed increments: 1000, 500, 333.333, 250, and 100 lines per inch.

The ULS setting is probably 250 lines per inch (~0.1mm line interval), and the dash period is also 0.1mm. I’m guessing the shade here is 60%, so the dash length is 0.06mm; in a lighter rectangle that shifts to, say, 0.03mm dashes.
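The numbers above can be checked with a little arithmetic. This is a minimal Python sketch, assuming (as guessed above) that the dash period equals the line interval and that dash length scales linearly with shade; `lpi_to_interval_mm` and `dash_length_mm` are hypothetical helper names, not anything from ULS or LightBurn.

```python
MM_PER_INCH = 25.4

# Fixed ULS line-interval increments listed above, in lines per inch.
ULS_LPI_STEPS = [1000, 500, 1000 / 3, 250, 100]


def lpi_to_interval_mm(lpi: float) -> float:
    """Convert lines per inch to a line interval in millimetres."""
    return MM_PER_INCH / lpi


def dash_length_mm(shade: float, lpi: float = 250) -> float:
    """Dash length for a shade in [0, 1].

    Assumption: dash period == line interval, and length is proportional
    to shade (a hypothetical linear model, not confirmed ULS behavior).
    """
    return shade * lpi_to_interval_mm(lpi)


if __name__ == "__main__":
    for lpi in ULS_LPI_STEPS:
        print(f"{lpi:8.3f} lpi -> {lpi_to_interval_mm(lpi):.4f} mm interval")
    print(f"60% shade at 250 lpi: {dash_length_mm(0.6):.4f} mm dash")
    print(f"30% shade at 250 lpi: {dash_length_mm(0.3):.4f} mm dash")
```

Strictly, 250 lpi is a 0.1016mm interval, so a 60% shade works out to a dash of about 0.061mm rather than exactly 0.06mm.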

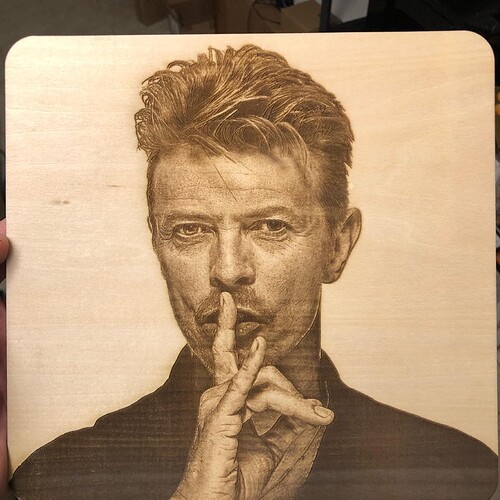

The user did this frequently on a ULS machine and was very disappointed that we couldn’t create anything similar on the new system. I’ve gotta say the look they got on the ULS machine was exceptionally high quality, and I’m jealous.

Correct me if I’m misinterpreting this. I see several questions about the limiting factor here. First, does LB have the ability to produce continuously variable-width dashes? I assume the Preview window shows a vertical resolution of 0.10mm, and it appears that 4 width quanta make up one square on screen. So the horizontal axis is instead broken into 0.025mm steps, giving us tall rectangular pixels.
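To see why that quantization matters, here is a minimal Python sketch. It assumes, as read from the Preview above, a 0.10mm line interval split into 4 horizontal steps of 0.025mm, and that a requested dash length snaps to the nearest step; LightBurn's actual rounding rule is not known here, and `quantize_dash` is a hypothetical helper.

```python
import math

LINE_INTERVAL_MM = 0.10   # vertical resolution read from the Preview (assumed)
STEPS_PER_INTERVAL = 4    # width quanta per on-screen square (assumed)
H_STEP_MM = LINE_INTERVAL_MM / STEPS_PER_INTERVAL  # 0.025 mm


def quantize_dash(length_mm: float, step_mm: float = H_STEP_MM) -> float:
    """Snap a requested dash length to the nearest whole step (round half up)."""
    return math.floor(length_mm / step_mm + 0.5) * step_mm


def available_lengths() -> list[float]:
    """All dash lengths that fit in one period on the quantized grid."""
    return [k * H_STEP_MM for k in range(STEPS_PER_INTERVAL + 1)]


if __name__ == "__main__":
    for wanted in (0.06, 0.03):
        print(f"wanted {wanted:.3f} mm -> got {quantize_dash(wanted):.3f} mm")
    print("distinct dash lengths per period:", len(available_lengths()))
```

Under these assumptions only 5 dash lengths (0 to 0.1mm in 0.025mm steps) exist per period, so a 60% shade (0.06mm) lands on 0.05mm and a 30% shade (0.03mm) on 0.025mm, whereas the ULS output appears continuous.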

Like I said, I didn’t appreciate how well the ULS burned this until now. The result looks higher quality, and I’m really hoping we can make LB/Ruida do this.

My interpretation of ULS’s halftoning:

The dashes all start at a regular period equal to the line interval, and each has a continuously variable width (or at least enough bit depth to appear so), rather than being broken down into pixel quanta at all. Each successive line is offset by half a dash period; since the dash period is fixed equal to the line interval, another way to say the same thing is that the offset is half the line interval.
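That interpretation can be written as a small dash generator. This is a minimal Python sketch of the interpretation above, not ULS's actual code: it assumes a fixed dash period equal to the line interval, a continuous (unquantized) dash length of shade × period, and a half-period shift on every other line, which is what produces the staggered honeycomb. `Dash` and `honeycomb_dashes` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Dash:
    line: int          # raster line index
    y_mm: float        # vertical position of the raster line
    x_start_mm: float
    x_end_mm: float


def honeycomb_dashes(shade: float, width_mm: float, n_lines: int,
                     interval_mm: float = 0.1) -> list[Dash]:
    """Fixed-period dashes with continuous length; odd lines shifted half a period."""
    if not 0.0 <= shade <= 1.0:
        raise ValueError("shade must be between 0 and 1")
    if interval_mm <= 0:
        raise ValueError("interval_mm must be positive")
    period = interval_mm        # dash period == line interval
    dash_len = shade * period   # continuous, not snapped to pixel quanta
    dashes = []
    for line in range(n_lines):
        y = line * interval_mm
        offset = period / 2 if line % 2 else 0.0
        k = -1                  # start one period early so a shifted dash can cover x = 0
        while True:
            x0 = offset + k * period
            if x0 >= width_mm:
                break
            start, end = max(x0, 0.0), min(x0 + dash_len, width_mm)
            if end > start:     # skip dashes clipped away entirely or of zero length
                dashes.append(Dash(line, y, start, end))
            k += 1
    return dashes


if __name__ == "__main__":
    for d in honeycomb_dashes(0.6, 0.3, 2):
        print(f"line {d.line}: {d.x_start_mm:.3f} -> {d.x_end_mm:.3f} mm")
```

A useful property of this layout is that every line burns the same fraction of its length (the shade), whether or not it is shifted; only the dash positions change from line to line.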

So, as I understand it, the limitations of the LB/Ruida system are that it lacks horizontal resolution, and that neither Ordered nor any sort of Halftone/newsprint mode will produce this pattern.

Is this numerically too difficult, and/or would it be too much data for the Ruida 6445G? Stepping back, I do see that the Preview window might need significant recoding to display this correctly if it formats the horizontal resolution at 4x the vertical.
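On the data question, a rough count of dash segments gives a sense of scale. This Python sketch assumes the 0.1mm period and line interval guessed above and a hypothetical 100mm × 100mm area, working in integer micrometres to avoid float rounding; it only counts segments and says nothing about what the Ruida 6445G can actually buffer or stream. For comparison it also counts cells on the 0.025mm × 0.1mm grid the Preview appears to use.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def dash_segments(width_um: int, height_um: int, interval_um: int = 100) -> int:
    """Upper bound on dash count: one per period per line, plus one
    partial dash at the left edge of each shifted line."""
    lines = ceil_div(height_um, interval_um)
    per_line = ceil_div(width_um, interval_um) + 1
    return lines * per_line


def grid_cells(width_um: int, height_um: int, h_step_um: int = 25,
               interval_um: int = 100) -> int:
    """Pixel count on a grid of h_step_um columns by interval_um rows."""
    return ceil_div(width_um, h_step_um) * ceil_div(height_um, interval_um)


if __name__ == "__main__":
    w = h = 100_000  # hypothetical 100 mm x 100 mm area, in micrometres
    print("dash segments (upper bound):", dash_segments(w, h))
    print("0.025 x 0.1 mm grid cells:", grid_cells(w, h))
```

That works out to roughly one million dash segments versus four million grid cells for the same area. Whether that volume is a problem depends on how LightBurn encodes raster data for the controller, which this sketch does not model.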

Oz, are you open to external code developers on this? It’s actually an excellent performance mode for lasers, one I assumed was common, but now I see that’s not the case. I’m realizing that the method ULS used isn’t actually “halftoning” as the field defines it, but it is simple and exceptionally well suited to a laser’s raster lines. I can also see a couple of processing options that would significantly enhance its capability.